I just figured this out, and it’s too cool not to share. I have business-grade switches at my house, so I already have various VLANs set up. You’ll need that in place to make this work, including port tagging on the switch port your Docker host is plugged into.

This requires no additional configuration on the host, apart from the kernel module noted in the edit below. I’ve included two examples: default_lan and vlan5. If you just want to give a container an IP on your local LAN, use default_lan as the template. If you want to run a service on a VLAN IP, use vlan5.

EDIT: You may need to run modprobe 8021q (and/or add 8021q to /etc/modules so it loads at boot).

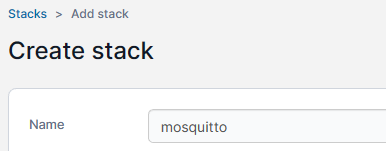

You do not need to include default_lan in order to use a VLAN. This of course also works great in Portainer.

    networks:
      default_lan: # the name you'll reference in the service configuration
        driver: ipvlan
        driver_opts:
          parent: enp1s0d1 # the interface on your docker host that it will tunnel through
        ipam:
          config:
            - subnet: 10.1.1.0/24 # your network's subnet
              gateway: 10.1.1.1 # your network's gateway
      vlan5:
        driver: ipvlan
        driver_opts:
          parent: enp1s0d1.5 # I've added '.5' for vlan 5
        ipam:
          config:
            - subnet: 10.1.5.0/24 # the vlan's subnet
              gateway: 10.1.5.1 # the vlan's gateway

    services:
      service_on_lan:
        networks:
          default_lan:
            ipv4_address: 10.1.1.51
      service_on_vlan:
        networks:
          vlan5:
            ipv4_address: 10.1.5.55

I have not tested it, but I believe you can also add a second pair of subnet and gateway lines for IPv6, and then specify an ipv6_address in the service alongside the ipv4_address.
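A rough, untested sketch of that dual-stack idea might look like the following. The IPv6 prefix, gateway, and address are documentation placeholders rather than real allocations, and the `enable_ipv6: true` line is an assumption about what Docker needs before it will accept an IPv6 subnet on the network.

```yaml
networks:
  vlan5:
    driver: ipvlan
    enable_ipv6: true # assumption: required for the IPv6 subnet to be accepted
    driver_opts:
      parent: enp1s0d1.5
    ipam:
      config:
        - subnet: 10.1.5.0/24
          gateway: 10.1.5.1
        - subnet: "2001:db8:5::/64" # placeholder prefix, substitute your vlan's
          gateway: "2001:db8:5::1" # placeholder gateway

services:
  service_on_vlan:
    networks:
      vlan5:
        ipv4_address: 10.1.5.55
        ipv6_address: "2001:db8:5::55" # placeholder address
```

The IPv6 values are quoted only to keep YAML parsers from tripping over the colons.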

You can also use macvlan instead, which gives the container its own unique MAC address that you can see on your network. For my needs, I have found the best approach is to define one macvlan network per IP. Otherwise you can easily run into duplicate IP problems.

    networks:
      macvlan5_5: # the name you'll reference in the service configuration; the _5 suffix is the IP
        driver: macvlan
        driver_opts:
          parent: enp1s0d1.5 # the interface on your docker host, plus .# for the vlan #
        ipam:
          config:
            - subnet: 10.1.5.0/24 # your network's subnet
              gateway: 10.1.5.1 # your network's gateway
              ip_range: 10.1.5.5/32 # the static ip you want to assign to this network's container

And then just assign the network in your container:

    services:
      service_on_macvlan5:
        networks:
          - macvlan5_5
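If per-IP networks get unwieldy, another option might be a single macvlan network for the whole VLAN, with each service pinned to its own ipv4_address the same way the ipvlan examples do it. This is only a sketch: the network name `macvlan5` and the second service are made up for illustration, and it only sidesteps the duplicate IP problem if every attached service has an explicit address.

```yaml
networks:
  macvlan5: # hypothetical name: one shared network for all of vlan 5
    driver: macvlan
    driver_opts:
      parent: enp1s0d1.5
    ipam:
      config:
        - subnet: 10.1.5.0/24
          gateway: 10.1.5.1

services:
  service_on_macvlan5:
    networks:
      macvlan5:
        ipv4_address: 10.1.5.5 # pinned address instead of a /32 ip_range
  another_service: # hypothetical second container on the same vlan
    networks:
      macvlan5:
        ipv4_address: 10.1.5.6
```

The trade-off is that forgetting ipv4_address on any one service lets Docker choose an address from the whole subnet, which is exactly the situation the per-IP layout avoids.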

Unfortunately, the container does not seem to register its defined hostname, so my firewall just sees a new ‘unknown’ host with a random MAC address in its ARP table.
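One partial workaround for the random MAC, sketched here under assumptions: Compose has a service-level `mac_address` key (newer releases also accept it per network), so the container can be given a fixed MAC that you then label by hand on the firewall. The MAC below is an arbitrary locally administered value picked for illustration, and this does nothing to make the container announce its hostname.

```yaml
services:
  service_on_macvlan5:
    mac_address: "02:42:0a:01:05:05" # made-up locally administered MAC
    networks:
      - macvlan5_5
```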

Check out the complete Docker Network Drivers Overview page for more examples and usage.